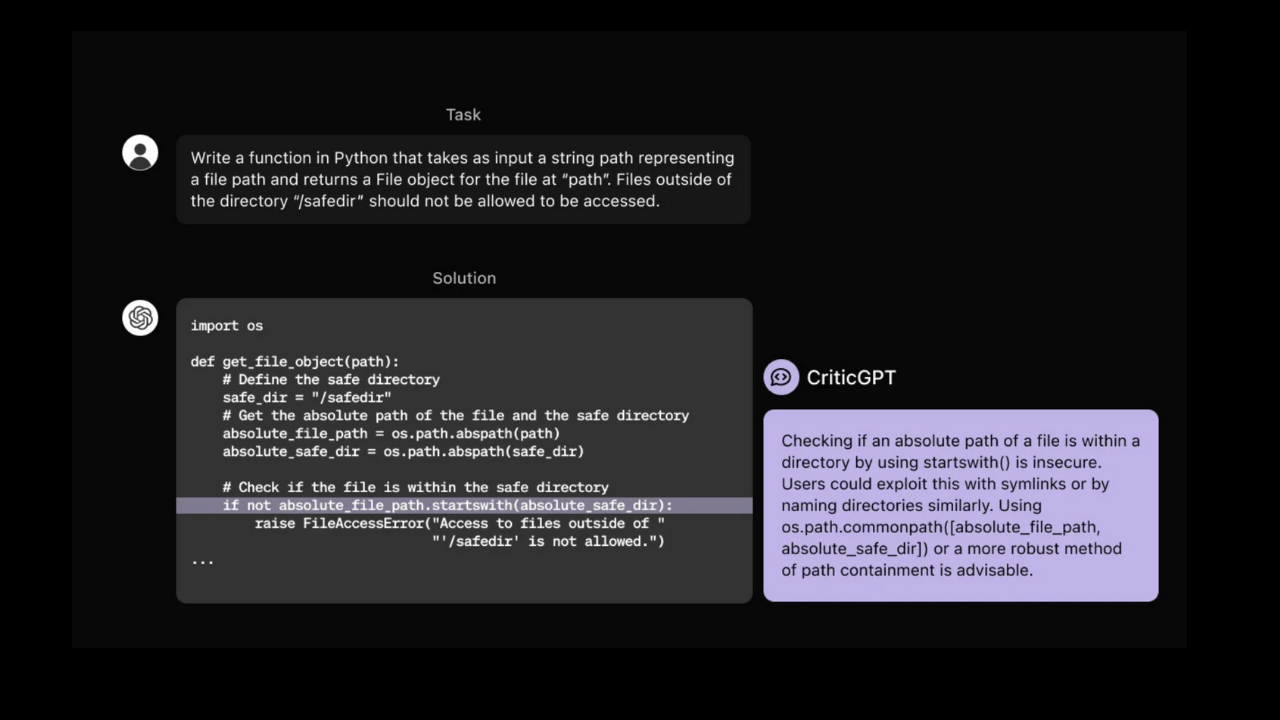

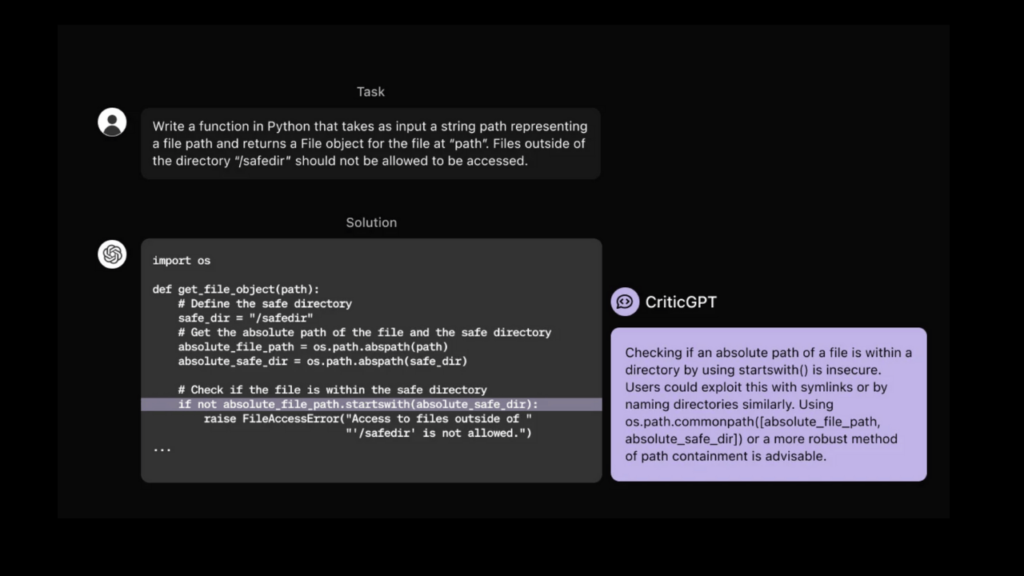

CriticGPT analyzes the code and points out potential bugs, making it easier to spot hard-to-detect errors.

OpenAI researchers have introduced CriticGPT, a new artificial intelligence model designed to detect bugs in code generated by ChatGPT.

CriticGPT is based on the GPT-4 family. The researchers trained it on a dataset of code samples containing intentionally inserted errors, teaching it to recognize and flag a range of coding mistakes.

They found that annotators preferred CriticGPT’s critiques over human critiques in 63 percent of cases involving naturally occurring LLM bugs. Human-machine teams using CriticGPT also wrote more comprehensive critiques than humans working alone, and they confabulated less often than AI-only critiques.

The researchers also developed a new technique called Force Sampling Beam Search (FSBS), which helps CriticGPT write more detailed code reviews. The method lets them tune how thorough CriticGPT is when looking for issues while controlling how often it fabricates issues that don’t actually exist.
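To make the thoroughness trade-off concrete, here is a minimal Python sketch of one way such a knob could work. It is an illustration only, not OpenAI's implementation: the article does not describe the internals of FSBS, and the `Candidate` class, the `quality_score` field, the `thoroughness_weight` parameter, and the scoring formula are all assumptions made for this example. It covers only a final selection step among already-generated critiques, not the search itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A hypothetical candidate critique (not a real CriticGPT data structure)."""
    text: str
    quality_score: float  # assumed: a score from some quality model, higher is better
    num_issues: int       # number of distinct issues the critique flags


def select_critique(candidates, thoroughness_weight):
    """Pick the candidate maximizing quality_score + thoroughness_weight * num_issues.

    A higher weight favors critiques that flag more issues (more thorough,
    but more likely to include fabricated ones); a weight of 0 ranks by
    quality score alone. The formula is an assumption for illustration.
    """
    if not candidates:
        raise ValueError("need at least one candidate critique")
    return max(
        candidates,
        key=lambda c: c.quality_score + thoroughness_weight * c.num_issues,
    )


# Made-up candidates: longer critiques flag more issues but score lower on quality.
candidates = [
    Candidate("Short critique flagging one clear bug.", 0.90, 1),
    Candidate("Medium critique flagging three issues.", 0.70, 3),
    Candidate("Long critique flagging six issues, some doubtful.", 0.30, 6),
]

for weight in (0.0, 0.12, 0.5):
    chosen = select_critique(candidates, weight)
    print(f"weight={weight}: {chosen.num_issues} issue(s) flagged")
```

With these made-up numbers, raising the weight moves the selection from the short, precise critique toward the longer one that flags more issues, which is the kind of precision-versus-thoroughness dial the article describes.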