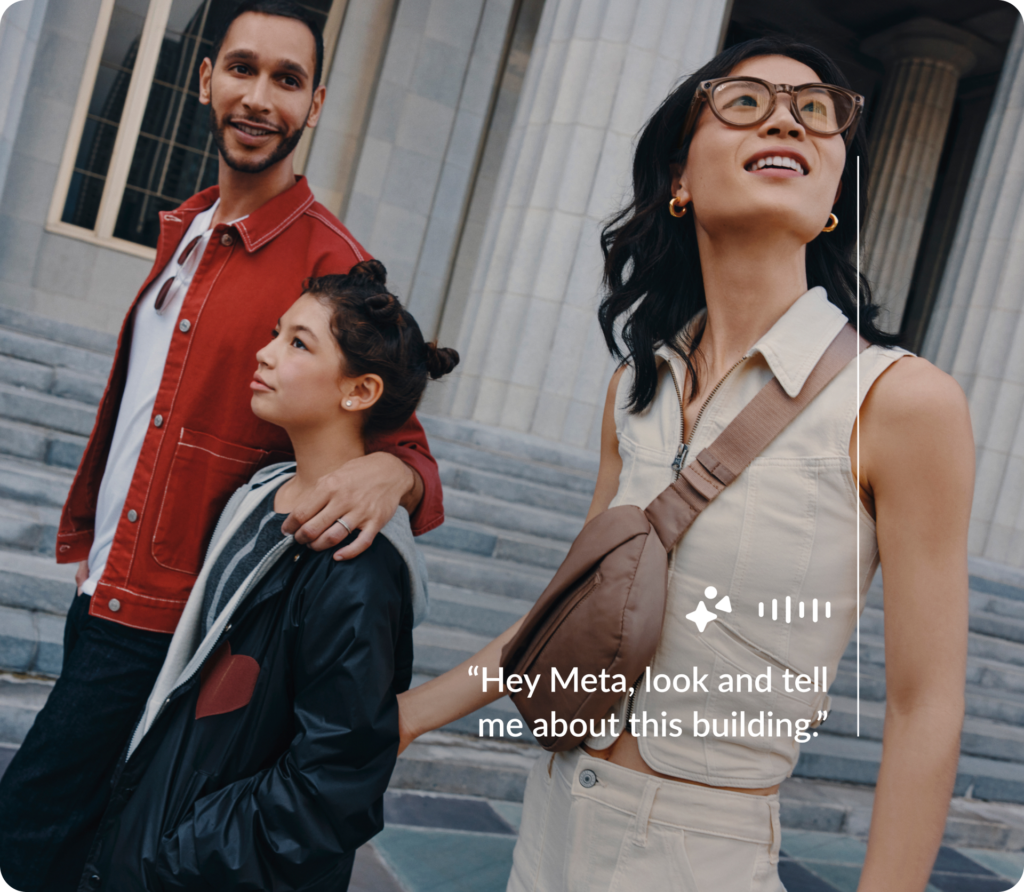

Live AI lets you chat naturally with Meta’s AI assistant while it continuously views your surroundings.

Meta announced the addition of three new features to its Ray-Ban smart glasses: live AI, live translation and Shazam. Live AI and live translation are only available to members of Meta’s Early Access Program, while Shazam support is now available to all users in the US and Canada.

Live AI and live translation were first introduced at Meta Connect 2024 earlier this year. With live AI, Meta’s AI assistant continuously views your surroundings, so you can converse with it naturally about what you are looking at. According to Meta, users will be able to use live AI for about 30 minutes at a time on a full charge.

The live translation feature allows the glasses to translate speech in real time between English and Spanish, French or Italian. You can choose to hear the translations through the glasses themselves or view the transcripts on your phone. To use the feature, you need to download language pairs in advance. You also need to specify which language you speak and which language the person you are speaking with uses.

Shazam support, meanwhile, is straightforward. To identify a song you hear, just ask Meta’s AI assistant what it is. Meta CEO Mark Zuckerberg’s demonstration shows how simple this is.

To use the features, your glasses must be running v11 software and you need version v196 of the Meta View app. For live AI and live translation, you will also need to apply for the Early Access Program. Meta’s new additions to Ray-Ban smart glasses challenge ChatGPT’s Advanced Voice Mode with vision and Google’s Project Astra.